Recently, so-called generative artificial intelligence (GenAI) has received a great deal of attention. To quote ChatGPT, it is “capable of generating new data, images, text or other content based on patterns or input.” Put another way, following Google Bard’s definition, it can create new data such as text, images, videos, and music, relying on machine learning algorithms that analyze existing data and then generate new data similar to the original.

Users often treat these language models as an “oracle that knows the answers to all questions.” Their creators let the tools describe and assess almost every sphere of our lives, including our emotions, and users eagerly take advantage of this.

But are these descriptions, answers to questions and reviews fully reliable, especially in terms of emotions? Let’s check it out!

Testing the efficiency of generative AI

A team of scientists from the department of linguistic engineering at Wroclaw University of Technology, which also included scholars working on our solution “Sentimenti – an analyzer of emotions in text,” subjected ChatGPT and other available language models (including sentiment analysis systems) to a comparative study.

As the Wroclaw team’s study showed, GenAI performed worst on pragmatic tasks requiring knowledge of the world, and specifically on emotion assessment.

Our tests at Sentimenti

Measuring sentiment, emotion and emotional arousal is the essence of Sentimenti. That’s why we were tempted to run a simple test of our own.

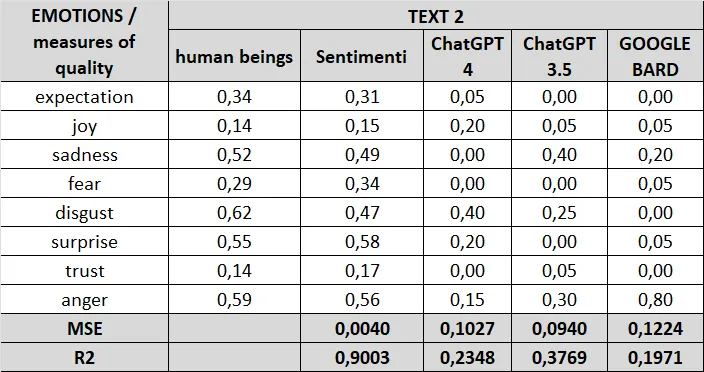

Using the “Sentimenti analyzer”, ChatGPT 4, ChatGPT 3.5 and Google Bard, we analyzed four randomly selected texts for the emotions they convey. To measure how well each program performed, we adopted:

- MSE (mean squared error): the average squared difference between a program’s scores and the benchmark scores; the smaller it is, the better the program under test performed, and

- R² (coefficient of determination): a measure of how well a program’s scores fit the benchmark; the larger its value, the better the fit.
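For readers who want to reproduce the two measures, here is a minimal Python sketch using the standard textbook formulas. It assumes the benchmark (respondent) scores and one program’s scores are equal-length lists covering the same emotion indicators; the article does not say exactly how the scores were arranged, so the function names and the toy numbers are illustrative only.

```python
def mse(benchmark, predicted):
    """Mean squared error between benchmark (respondent) scores and a program's scores."""
    if len(benchmark) != len(predicted) or not benchmark:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((b - p) ** 2 for b, p in zip(benchmark, predicted)) / len(benchmark)


def r_squared(benchmark, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot, treating the benchmark as ground truth."""
    if len(benchmark) != len(predicted) or not benchmark:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_b = sum(benchmark) / len(benchmark)
    ss_res = sum((b - p) ** 2 for b, p in zip(benchmark, predicted))  # residual sum of squares
    ss_tot = sum((b - mean_b) ** 2 for b in benchmark)                # total sum of squares
    if ss_tot == 0:
        raise ValueError("benchmark scores have zero variance; R² is undefined")
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical scores for one text, not taken from the study.
    respondents = [0.10, 0.40, 0.35, 0.80]
    program = [0.12, 0.38, 0.30, 0.75]
    print(f"MSE = {mse(respondents, program):.4f}")
    print(f"R²  = {r_squared(respondents, program):.4f}")
```

Computed this way, MSE is always zero or positive, while R² can even turn negative when a program fits worse than simply predicting the benchmark mean.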

The benchmark for the selected programs was the emotional evaluation of the texts by respondents (people) who took part in the research carried out during the development of our emotion analyzer. Since ChatGPT 4, ChatGPT 3.5 and Google Bard report emotion values to two decimal places, we rounded the “Sentimenti analyzer” results and the respondent survey results to two decimal places as well.
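The rounding step can be sketched in Python as below. The article does not state which rounding mode was used, so round-half-up is an assumption here; the sketch goes through `decimal` because Python’s built-in `round()` on floats can surprise (for example, `round(2.675, 2)` gives `2.67`, since 2.675 is stored as a slightly smaller binary value). The function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_2dp(value):
    """Round a score to two decimal places, half-up.

    Assumption: the article does not name a rounding mode; half-up is used here.
    Converting via str() keeps the decimal digits as written (e.g. "2.675"),
    avoiding binary floating-point artifacts.
    """
    return float(Decimal(str(value)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP))


def round_scores(scores):
    """Apply the same two-decimal rounding to every score in a list."""
    return [round_2dp(s) for s in scores]


if __name__ == "__main__":
    analyzer_raw = [0.4137, 0.2051, 0.675]  # hypothetical raw analyzer scores
    print(round_scores(analyzer_raw))
```

Rounding both the analyzer output and the respondent results the same way keeps the comparison on equal footing with the two-decimal chatbot answers.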

Randomly selected texts subjected to AI analysis

Text 1

As I have stayed at this hotel many times, my review will not change much. Underneath the rather ugly and neglected exterior of the hotel, there is a nicely renovated interior and friendly staff. The rooms are clean, bright and quite spacious. Breakfasts are excellent. Unfortunately, the restaurant menu, as in all Novotels, is terrible, and the dishes are modest, unpalatable and very expensive. Fortunately, there are many places in Krakow where you can eat well. I definitely do not recommend the restaurant, but the hotel as much as possible. It would be worthwhile to think about renewing the body of the hotel, as it is from a different era. It was the same in my student days, 25 years ago!!!!

Text 2

The hotel in an ideal location as far as Łódź is concerned, two minutes from Piotrowska in the center, but as far as cleanliness is a tragedy the bathroom stank and the bedding dirty, for this class of hotel it is unacceptable

Text 3

The hotel only for a one-night stay for business and nothing else. After a recent stay of several days with family, we decided that this would be our last visit to this hotel. Food standard for all Ibis, rooms also. Service at the reception – roulette. Upon checking in, we were given perhaps the smallest corner room in the entire hotel despite a prior phone reservation for specific rooms on specific floors, supposedly due to occupancy. By a strange coincidence, a few minutes later during service by another receptionist our rooms were found. In the rooms not very clean, housekeeping does not pay attention to the types of water put despite numerous requests. The restaurant leaves much to be desired.

Text 4

The hotel is located near the center of Wroclaw. Room and bathroom clean, toiletries ok, maybe a little too soft bed, but it was not bad. Breakfast quite good. Parking additionally paid 25 zl. On a very big plus I wanted to evaluate the service. At the reception everything efficiently and with a smile. However, during breakfast, one of the waitress ladies surprised me very positively. She very quickly organized a table for 8 people, immediately brought a feeding chair for the baby, asked the children how they liked Wroclaw, etc. At one point my 7-year-old daughter asked if there was ketchup to which the lady with a smile: sure there is, I’m already going to bring you. A few words and gestures and it makes an impression.

Results

Our simple test confirmed the Wrocław researchers’ conclusion that, when it comes to analyzing emotions, chatbots are significantly inferior to specialized programs such as “Sentimenti.”

Our reference point is the respondents’ results, against which we compare the other models: Sentimenti, ChatGPT 4.0, ChatGPT 3.5 and Google Bard.

As the results show, the “Sentimenti analyzer” achieves significantly better MSE and R² values than the other programs.

MSE: for the “Sentimenti analyzer”, the first nonzero digit of this value appears only in the third or even the fourth decimal place, meaning the analyzer’s error approaches zero. For the other programs, the first nonzero digit appears already in the second or even the first decimal place, indicating a much larger error.

R²: for the “Sentimenti analyzer”, the values range from 0.8217 to 0.9563; a value between 0.8 and 0.9 is considered a good fit, and a value between 0.9 and 1.0 an excellent fit.

For the remaining programs, the highest R² values were:

- ChatGPT 4.0: 0.3981

- ChatGPT 3.5: 0.3769

- Google Bard: 0.4609

These values indicate an unsatisfactory fit.
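The verbal bands above can be expressed as a small Python helper and applied to the reported values. The 0.8–0.9 and 0.9–1.0 bands come from the text; labelling everything below 0.8 as “unsatisfactory” is an assumption, since the article uses that word for values around 0.4 but does not define a threshold. A value of exactly 0.9 is counted as excellent here, because the two stated ranges share that endpoint.

```python
def describe_fit(r2):
    """Map an R² value to the verbal bands used in this article.

    0.9-1.0 -> "excellent", 0.8-0.9 -> "good" (as stated in the text).
    Assumption: anything below 0.8 is labelled "unsatisfactory".
    """
    if r2 >= 0.9:
        return "excellent"
    if r2 >= 0.8:
        return "good"
    return "unsatisfactory"


# R² values reported above (Sentimenti: lowest and highest; other programs: highest).
REPORTED_R2 = {
    "Sentimenti (lowest)": 0.8217,
    "Sentimenti (highest)": 0.9563,
    "ChatGPT 4.0": 0.3981,
    "ChatGPT 3.5": 0.3769,
    "Google Bard": 0.4609,
}

if __name__ == "__main__":
    for name, value in REPORTED_R2.items():
        print(f"{name:22s} {value:.4f}  {describe_fit(value)}")
```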

GenAI hallucinations

Insightful analysts point out another problem with generative models: their tendency to output erroneous information.

If this phenomenon, referred to as “hallucination,” recurs, there is a danger that ChatGPT, when asked again about the sentiment level of a given financial asset, could give a completely different answer from the one it gave originally. Investment strategies would then be fed erroneous data, which could affect the results of financial transactions.

The “Sentimenti analyzer” carries no such risk: however many times we query the emotion levels of the same text, we get identical results.
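One way to check this kind of repeatability is to score the same text several times and measure how much each indicator moves. The harness below is a hypothetical Python sketch, not the procedure used in our test: `score_fn` stands for any scorer returning a list of indicator values, and the two toy scorers merely contrast a deterministic analyzer with a model whose answers vary between calls.

```python
import random


def repeatability(score_fn, text, runs=5):
    """Score the same text `runs` times and report how much the scores vary.

    score_fn is a hypothetical callable: text -> list of indicator scores.
    Returns (all_identical, max_range), where max_range is the largest
    max-min spread seen for any single indicator across the runs.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    results = [score_fn(text) for _ in range(runs)]
    per_indicator = zip(*results)  # i-th score from every run, grouped together
    max_range = max(max(vals) - min(vals) for vals in per_indicator)
    all_identical = all(r == results[0] for r in results)
    return all_identical, max_range


def fixed_scorer(text):
    # Toy deterministic stand-in: the same text always yields the same scores.
    words = text.split()
    return [len(words) / 100, sum(len(w) for w in words) / 1000]


def noisy_scorer(text):
    # Toy stand-in for a model whose answers vary between calls.
    return [s + random.uniform(-0.1, 0.1) for s in fixed_scorer(text)]


if __name__ == "__main__":
    review = "Breakfast quite good. Parking additionally paid."
    print(repeatability(fixed_scorer, review))  # identical scores on every run
    print(repeatability(noisy_scorer, review))  # typically reports a nonzero spread
```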

GenAI vs. Sentimenti in financial markets

As part of the Sentistocks project, we use emotion intensity measurement to predict the future values of selected financial instruments. Measuring the intensity of emotion allows us to determine where the mood of the market, commonly referred to as investor sentiment, is headed.

Correlating this indicator (built, after all, on the emotions that investors feel) with financial data makes it possible, using trained models, to predict the future values of financial instruments. Currently, the model analyzes sentiment in the cryptocurrency market (predicting the Bitcoin price at 15-minute intervals).
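Before emotion intensity can be set against 15-minute price data, the individual readings have to be put on the same time grid. The Python sketch below shows one simple way to do that, averaging timestamped intensity scores per 15-minute window. It is an assumption for illustration, not the Sentistocks pipeline; the function names are hypothetical and the project may aggregate differently.

```python
from collections import defaultdict
from datetime import datetime


def bucket_15min(ts):
    """Floor a timestamp to the start of its 15-minute interval."""
    return ts.replace(minute=ts.minute - ts.minute % 15, second=0, microsecond=0)


def aggregate_intensity(readings):
    """Average emotion-intensity readings per 15-minute interval.

    readings: iterable of (datetime, float) pairs, e.g. one score per post.
    Returns a dict {interval_start: mean_intensity} ordered by time.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, value in readings:
        key = bucket_15min(ts)
        sums[key] += value
        counts[key] += 1
    return {k: sums[k] / counts[k] for k in sorted(sums)}


if __name__ == "__main__":
    readings = [
        (datetime(2023, 5, 1, 12, 3), 0.2),  # hypothetical intensity scores
        (datetime(2023, 5, 1, 12, 14), 0.4),
        (datetime(2023, 5, 1, 12, 16), 0.9),
    ]
    for start, avg in aggregate_intensity(readings).items():
        print(start.strftime("%H:%M"), round(avg, 2))
```

Each averaged window can then be aligned with the price observation for the same interval, or the next one when the goal is prediction.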

There are also reports of ChatGPT being used to predict stock market returns using sentiment analysis of news headlines.

As the authors of the cited publication point out:

…analysis shows that ChatGPT sentiment scores show statistically significant predictive power on daily stock market returns. Using news headline data and sentiment generation, we find a strong correlation between ChatGPT scores and subsequent daily stock market returns in our sample.
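To make “correlation between scores and subsequent daily returns” concrete, the Python sketch below pairs each day’s sentiment score with the next day’s return and computes the Pearson correlation. This is only an illustration of the idea under the assumption that both series are aligned by calendar day; it does not reproduce the cited authors’ methodology and omits the significance testing they mention. Pearson is implemented by hand to avoid depending on `statistics.correlation`, which requires Python 3.10+.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def next_day_correlation(daily_scores, daily_returns):
    """Correlate the sentiment score on day t with the return on day t+1."""
    return pearson(daily_scores[:-1], daily_returns[1:])


if __name__ == "__main__":
    scores = [0.1, 0.5, 0.9, 0.2, 0.6]           # hypothetical daily sentiment scores
    returns = [0.0, 0.004, 0.011, 0.019, -0.002]  # hypothetical daily returns
    print(round(next_day_correlation(scores, returns), 3))
```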

Particularly noteworthy is this phrase from the publication:

ChatGPT sentiment scores show statistically significant predictive power.

Our brief test showed that, for ChatGPT (4.0 and 3.5), the quality measures (MSE and R²) of emotion measurement, on which sentiment and its direction are based, are unsatisfactory. And yet the authors of the referenced publication obtained positive results.

One might then ask how much better their results would have been had our Sentimenti analyzer been used to measure investor sentiment, especially since the R² we recorded for our solution was more than twice as high as for ChatGPT.
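The “more than twice as high” figure can be checked against the values reported in the Results section, conservatively dividing the lowest R² recorded for Sentimenti by the highest R² recorded for each ChatGPT version:

$$
\frac{0.8217}{0.3981} \approx 2.06, \qquad \frac{0.8217}{0.3769} \approx 2.18
$$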

Summary

Trying to be objective in our simplified comparison, we unfortunately see a number of risks in, or even obstacles to, using generative artificial intelligence models in professional applications, especially in areas based on sentiment and emotion measurement. In our opinion, this stems from the design of the solution itself, the source data used to train the models, and the very concept of sentiment and emotion measurement.

In particular:

- differences in how the language models are built: the Sentimenti model was built from scratch on data from what is perhaps the largest study of its kind in the world involving human participants. Such prepared, targeted research usually yields narrower functionality, but at the same time much higher-quality results than the approach of collecting data from practically every possible area to give the model as much “knowledge” as possible.

- differences in how sentiment and emotion are interpreted: in the Sentimenti project, subjects answered the question of what emotions the text shown to them aroused in them, with a minimum of 50 different people for each text. In this way, we measured how the reader (the message recipient) received the text. Generative AI models, by contrast, give a “subjective” evaluation by a given language model, which is closer to guessing which emotions the author of the message (the sender) intended to evoke than to how that message might be received. In our opinion, such an interpretation is not suitable for objectively determining emotions or for tools that rely on sentiment and emotion measurement.

- hallucinations: a well-known problem of generative models. The Sentimenti model, which focuses on evaluating 11 emotive indicators, does not have this difficulty: evaluating a given text always gives the same result. GenAI, by contrast, can relatively often give an answer that is not true. In our opinion, this rules out GenAI for professional linguistic applications.
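The reader-centred benchmark described in the second point can be sketched in Python as follows: collect each respondent’s ratings of one text and average them per indicator, refusing to build a benchmark from fewer than 50 people. The 50-respondent minimum and the 11 indicators come from the text; the plain arithmetic mean and the function and constant names are assumptions, since the article does not describe how individual answers were combined.

```python
from statistics import mean

N_INDICATORS = 11     # the article mentions 11 emotive indicators
MIN_RESPONDENTS = 50  # "a minimum of 50 different people for each text"


def benchmark_vector(ratings):
    """Average respondents' ratings of one text into a benchmark vector.

    ratings: list of per-respondent lists, each with N_INDICATORS scores.
    Assumption: a plain arithmetic mean per indicator.
    """
    if len(ratings) < MIN_RESPONDENTS:
        raise ValueError(f"need at least {MIN_RESPONDENTS} respondents, got {len(ratings)}")
    for r in ratings:
        if len(r) != N_INDICATORS:
            raise ValueError(f"each rating must have {N_INDICATORS} scores")
    # zip(*ratings) regroups the scores indicator by indicator.
    return [mean(column) for column in zip(*ratings)]
```

A vector like this is what the programs’ scores would be compared against with MSE and R².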